The exponential rise of Large Language Models (LLMs) like Google's Gemini or OpenAI's GPT has revolutionized industries, transforming how businesses interact with technology and customers. However, this has brought with it a new set of challenges in itself. Such is the scale that OWASP released a separate categories list of possible vulnerabilities on LLMs. As outlined in our previous blogs, one of key vulnerabilities in LLMs is Prompt Injection.

In the evolving landscape of AI-assisted security assessments, the performance and accuracy of large language models (LLMs) are heavily dependent on the clarity, depth, and precision of the input they receive. Prompts act as the bread and butter for LLMs—guiding their reasoning, refining their focus, and ultimately shaping the quality of their output. When dealing with complex security scenarios, vague or minimal inputs often lead to generic or incomplete results, whereas a well-articulated, context-rich prompt can extract nuanced, actionable insights. Verbiage, in this domain, is not just embellishment—it’s an operational necessity that bridges the gap between technical expectation and intelligent automation. Moreover, it's worth noting that the very key to bypassing or manipulating LLMs often lies in the same prompting skills—making it a double-edged sword that demands both ethical responsibility and technical finesse. From a security perspective, crafting detailed and verbose prompts may appear time-consuming, but it remains the need of the hour.

"PenTestPrompt" is a tool designed to automate and streamline the generation, execution, and evaluation of attack prompts which would aid in the red teaming process for LLMs. This would also add very valuable datasets for teams implementing guardrails & content filtering for LLM based implementations.

The Problem: Why Red Teaming LLMs is Critical

Prompt injection attacks exploit the very foundation of LLMs—their ability to understand and respond to natural language and are one of the most critical vulnerabilities. For instance: -

- An attacker could embed hidden instructions in inputs to manipulate the model into divulging sensitive information.

- Poorly guarded LLMs may unintentionally provide harmful responses or bypass security filters.

Manually testing these vulnerabilities is a daunting task for penetration testers, requiring significant time and creativity. The key questions are: -

- How can testers scale their efforts to identify potential prompt injection vulnerabilities?

- How to ensure complete coverage in terms on context and techniques of prompt injection?

LLMs are especially good at understanding and generating natural language text and thus why not leverage their expertise for generating prompts which can be used to test for prompt injection?

This is where "PenTestPrompt" helps. It unleashes the creativity of the LLMs for intelligently/contextually generating prompts that can be submitted to applications where prompt injection is to be tested for. Internal evaluation has shown that it significantly improves the quality of prompts and drastically reduces the time required to test, making it simpler to detect, report and fix a vulnerability.

What is "PenTestPrompt"?

"PenTestPrompt" is a unique tool that enables users to: -

- Generate highly effective attack prompts with the context of the application - based on the application functionality and potential threats

- Allows to automate the submission of generated prompts to target application

- Leverages API key provided by user to generate prompts

- Logs and analyzes responses using customizable keywords

Whether you're a security researcher, developer, or organization safeguarding an AI-driven solution, "PenTestPrompt" streamlines the security testing process for LLMs specially to uncover prompt injection vulnerability.

With "PenTestPrompt", the entire testing process can become automated as the key features are: -

- Generate attack prompts targeting the application

- Automate their submission to the application models’ API

- Log and evaluate responses and export results

- Download only the findings marked as vulnerable by response evaluation system or download the entire log of request-response for further analysis (logs are downloaded as CSV for ease in analysis)

How Does "PenTestPrompt" Work?

"PenTestPrompt" offers a Command-Line Interface (CLI) as well as a Streamlit-based User Interface (UI). There are mainly three core functionalities: – Prompt Generation, Request Submission & Response Analysis. Below is detailed description for all three phases: -

1. Prompt Generation

This tool is completely configurable with pre-defined instructions based on the experience in prompting for security. It supports multiple model providers (like Anthropic, Open AI etc.) and models that can be used with your own API key through a configuration file. The tool allows to generate prompts for pre-defined prompt bypass techniques/attack types through pre-defined system prompts for each technique and also allows to modify the system instruction provided for this generation. It also takes the context of the application to gauge performance of certain types of prompts for a particular type of application.

Take an example, where a tester is trying for "System Instruction/Prompt Leakage" with various methods like obfuscation, spelling errors, logical reasoning etc. – the tool will help generate X number of prompts for each bypass technique so that the tester can avoid writing multiple prompts manually for each technique.

2. Request Submission

For end-to-end testing and scaling, once we have generated X number of prompts, the tester also needs to submit the prompts to the application functionality. This is what the second phase of the tools helps with.

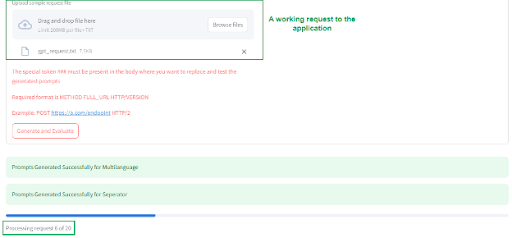

It allows the tester to upload a requests.txt file, containing the target request (the request file must be a latest call to the target application with an active session) and a replaced parameter (with a special token "###") in the request body where the generated prompts are to be embedded. The tool will automatically send the generated prompts to the target application, and log the responses for analysis. A sample request file should look like -

The tool directly submits the request to the application by replacing the generated prompts in the request one after other and capture all request/responses in a file.

3. Response Evaluation

Once all request/responses are logged to a file, this phase allows evaluation of responses using a keyword-matching mechanism. Keywords, designed to identify unsafe outputs, can be customized to fit the security requirements of the application by simply modifying the keywords file available in the configuration. The tester can choose to view results only flagged as findings, only error requests or the combined log. This facilitates easier analysis.

Below, we see a sample response output.

With the above functionalities, this tool allows everyone to explore, modify and scale their processes for prompt injection and analysis. This tool is built with modularity in mind – each and every component, even those pre-defined by experience, can be modified and configured to suit the use case of the person using the tool. As they say, the tool is as good as the person configuring and executing it! This tool allows onboarding new model providers & models, writing new attack techniques, modifying the instructions for better context and output and listing keywords for better analysis etc.

Conclusion

As LLMs continue to transform industries, it is very important to keep on enhancing their security. "PenTestPrompt" is a game-changer in the realm of scaling red teaming efforts for prompt injection and implementation of guardrails & content filtering for LLM based implementations. By automating the creation of attack prompts that are contextual and evaluating model responses, it empowers testers/developers to focus on what truly matters—identifying and mitigating vulnerabilities.

Ready to revolutionize your red teaming process or guard-railing LLMs? Get started with "PenTestPrompt" today and download a detailed User Manual to know the technicalities!